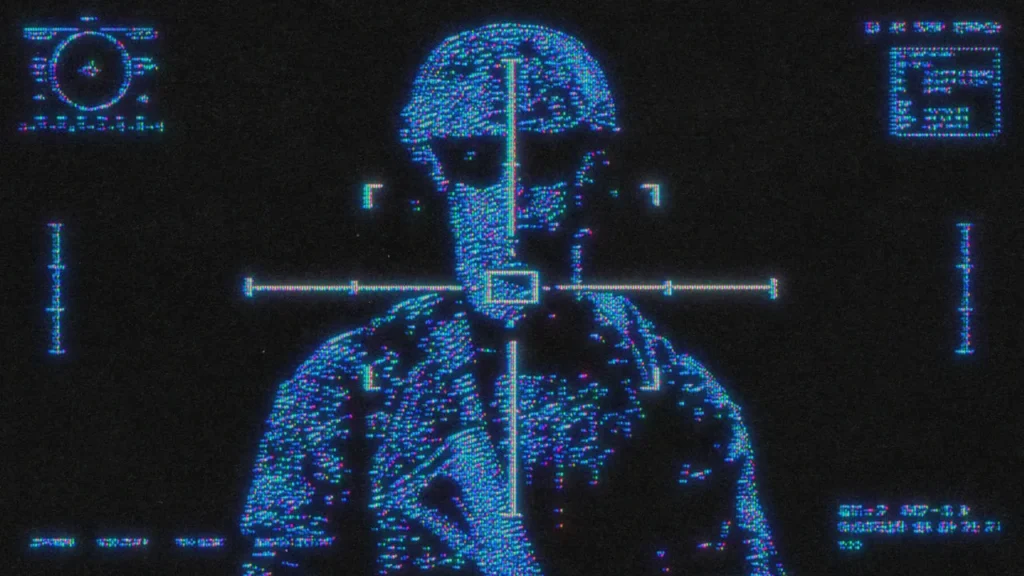

Russia's Use of Generative AI to Amplify Disinformation: A Growing Concern for Ukraine and the World

In the ongoing conflict between Russia and Ukraine, cyber warfare and disinformation have emerged as significant tools. Ukraine's Deputy Foreign Minister, Anton Demokhin, recently highlighted a disturbing trend: Russia's use of generative artificial intelligence (AI) to escalate its disinformation campaigns. Speaking at a cyber conference in Singapore, Demokhin emphasized that these AI-powered efforts pose a global threat. They complicate attempts to counter false narratives and make it harder to distinguish fact from fiction.

A Shift Toward AI-Driven Disinformation

While cyberattacks have long been part of Russia's strategy, Demokhin pointed out that disinformation campaigns are becoming more prominent. Traditionally, these campaigns relied on human-operated tactics such as fake news websites, manipulated images, and misleading social media posts. With the advent of generative AI, however, disinformation can now be produced and distributed at a far larger scale and with greater sophistication.

Generative AI can produce convincing text, images, and even videos that mimic legitimate sources. This allows malicious actors to create large volumes of false content quickly and tailor it to specific narratives and audiences. Demokhin stressed that this technology makes disinformation campaigns harder to detect. Because AI-generated content can appear credible and genuine, it reaches more people and carries more influence.

The Impact on Social Media

Social media platforms are among the areas where AI-generated disinformation is having the greatest impact. According to Demokhin, Russia has been able to spread false narratives more effectively by using AI to simulate real users and generate fake posts, comments, and shares. This activity creates an illusion of widespread support or dissent, which can sway public opinion and sow confusion among people trying to discern the truth.

The speed and sophistication of AI-generated content make it difficult for traditional fact-checking methods to keep up. Disinformation can be produced faster than it can be debunked, so false narratives spread widely before any corrective action is taken. The sheer volume of false information also overwhelms users trying to work out what is accurate. This not only shapes individual perceptions but also erodes trust in social media platforms and traditional news sources.

Russia’s Broader Disinformation Strategy

The use of AI in disinformation is part of Russia's broader strategy to destabilize Ukraine and undermine its international standing. Since the start of the conflict, Russian disinformation campaigns have aimed to erode Ukraine's credibility, both domestically and globally. These campaigns are not limited to Ukraine; they also target audiences worldwide, attempting to distort the international narrative and shift the blame for the ongoing war.

In August 2024, Ukrainian officials revealed that Russia’s FSB security service and military intelligence agency were actively targeting Ukrainians through online disinformation. By spreading false information about Ukraine’s government, military operations, and overall stability, Russia aims to sow discord among the Ukrainian populace, creating confusion and mistrust in the country’s leadership. This strategy seeks to weaken Ukraine from within while undermining international support for its cause.

The Global Threat of AI-Driven Disinformation

Ukraine is not the only target of these campaigns. AI-driven disinformation poses a significant threat to other nations as well, particularly open democracies that protect free speech. Disinformation campaigns can exploit political divisions, amplify societal tensions, and undermine trust in institutions. AI makes it easier for malicious actors to craft targeted narratives that appeal to specific demographics or political groups, creating the potential for widespread destabilization.

This new wave of disinformation also threatens the global media ecosystem. As AI-generated content becomes more realistic, it becomes increasingly difficult for news organizations, fact-checkers, and even government entities to separate authentic information from fabricated stories. This can lead to a breakdown in trust, where audiences become skeptical of all sources of information, further exacerbating the spread of disinformation.

Combating the Threat

Addressing the challenge posed by AI-driven disinformation will require coordinated efforts at both the national and international levels. Governments, tech companies, and civil society organizations must work together to develop tools that can detect and counter AI-generated disinformation. Social media platforms, in particular, have a crucial role to play in identifying and removing false content before it gains traction.

Educating the public about the dangers of disinformation and promoting digital literacy will also be key to reducing its impact. As AI continues to evolve, so too must the strategies to counteract its misuse. While technology has created new avenues for disinformation, it can also be used to build advanced detection systems that flag and mitigate false narratives.